- Published on September 12, 2025

- In AI Features

Why Responsible AI Demands Both Trust and Compute Ownership

In a live demonstration for AIM, Posha prepared paneer tikka masala in approximately 25 minutes.

“Knowledge-driven components are important because we don’t want everything to be just algorithmic innovation.”

As businesses recognise the potential of voice-driven tech, Pradhi AI is laying the foundation for an empathetic, responsive AI ecosystem.

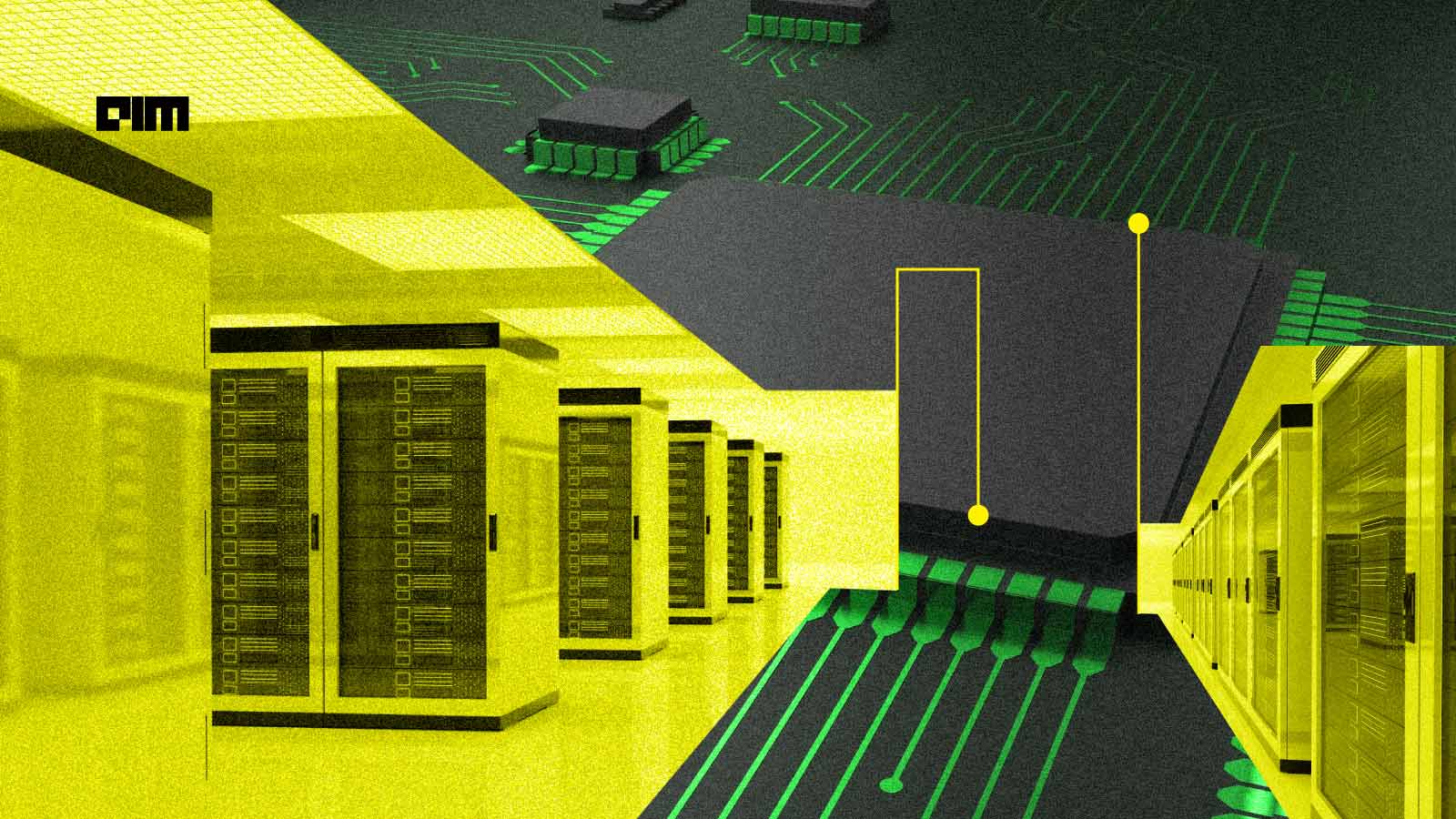

“The coastal city could showcase tangible results by applying deep tech to areas it already dominates.”

The company’s new AI image and photo editor deepens concerns over data use and consent gaps, experts warn.

The consortium insists sovereignty doesn’t mean shutting the door on global players.

“Democracy would not have been possible without storytelling being distributed.”

Natural gas may seem like a viable fuel for data centres, but higher costs and insufficient infrastructure pose challenges.